Stable Diffusion Prompt Reader

файл на civitaiстраница на civitaiStable Diffusion Prompt Reader

Github Repo:

https://github.com/receyuki/stable-diffusion-prompt-reader

A simple standalone viewer for reading prompts from Stable Diffusion generated image outside the webui.

There are many great prompt reading tools out there now, but for people like me who just want a simple tool, I built this one.

No additional environment or command line or browser is required to run it, just open the app and drag and drop the image in.

Features

Support macOS, Windows and Linux.

Simple drag and drop interaction.

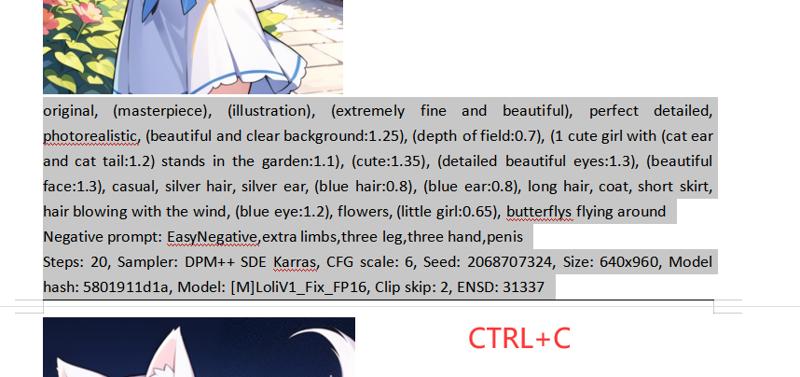

Copy prompt to clipboard.

Remove prompt from image.

Export prompt to text file.

Edit or import prompt to images

Vertical orientation display and sorting by alphabet

Detect generation tool.

Multiple formats support.

Dark and light mode support.

Supported Formats

A1111's webui

PNG

JPEG

WEBP

TXT

Easy Diffusion

PNG

JPEG

WEBP

InvokeAI

PNG

NovelAI

PNG

ComfyUI*

PNG

Naifu(4chan)

PNG

* Limitations apply. See format limitations.

If you are using a tool or format that is not on this list, please help me to support your format by uploading the original file generated by your tool as a zip file to the issues, thx.

Download

For macOS and Windows users

Download executable from above or from the GitHub Releases

For Linux users (not regularly tested)

Usage

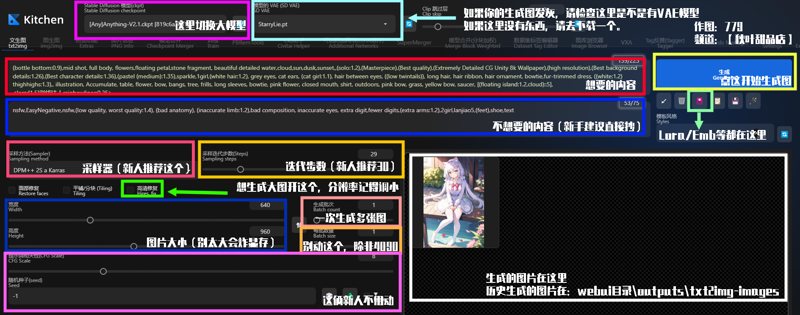

Read prompt

Open the executable file (.exe or .app) and drag and drop the image into the window.

OR

Right click on the image and select open with SD Prompt Reader

OR

Drag and drop the image directly onto executable (.exe or .app).

Export prompt to text file

Click "Export" will generate a txt file alongside the image file.

To save to another location, click the expand arrow and click "select directory".

Remove prompt from image

Click "Clear" will generate a new image file with suffix "_data_removed" alongside the original image file.

To save to another location, click the expand arrow and click "select directory".

To overwrite the original image file, click the expand arrow and click "overwrite the original image".

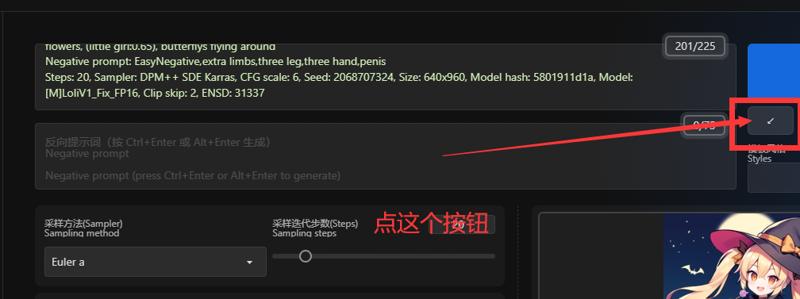

Edit image

Click "Edit" to enter edit mode.

Edit the prompt directly in the textbox or import a metadata file in txt format.

Click "Save" will generate a edited image file with suffix "_edited" alongside the original image file.

To save to another location, click the expand arrow and click "select directory".

To overwrite the original image file, click the expand arrow and click "overwrite the original image".

Format Limitations

TXT

Importing txt file is only allowed in edit mode.

Only A1111 format txt files are supported. You can use txt files generated by the A1111 webui or use the SD prompt reader to export txt from A1111 images

ComfyUI

Support for comfyUI requires more testing. If you believe your image is not being displayed properly, please upload the original file generated by ComfyUI as a zip file to the issues.

If there are multiple sets of data (seed, steps, CFG, etc.) in the setting box, this means that there are multiple KSampler nodes in the flowchart.

Due to the nature of ComfyUI, all nodes and flowcharts in the workflow are stored in the image, including those that are not being used. Also, a flowchart can have multiple branches, inputs and outputs.

(e.g. output hires. fixed image and original image simultaneously in a single flowchart)

SD Prompt Reader will traverse all flowcharts and branches and display the longest branch with complete input and output.

Easy Diffusion

By default, Easy Diffusion does not write metadata to images. Please change the Metadata format in settings to embed to write the metadata to images

TODO

Batch image processing tool

Credits

Inspired by Stable Diffusion web UI

App icon generated using Stable Diffusion with IconsMI

Special thanks to Azusachan for providing SD server